After the first weekend of tournament basketball, our Machine Learning Prediction tool has produced good results.

We had two measures of success: we wanted to win at least 46% of our picks, and we wanted to “win” with virtual money bet on the money lines. By both measures, we succeeded. We correctly picked 6 upsets out of the 13 games we chose (46%), and those 6 correct picks returned $1,359 on the $1,300 we laid ($100 per game), a profit of $59, or roughly a 5% ROI (4.5%).
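To make the scoring concrete, here is a minimal Python sketch of the arithmetic, assuming American-style money lines and the flat $100 stake per game described above. The helper names are hypothetical, and the +250 line in the usage example is an illustrative value, not one of our actual picks; only the totals (13 picks, 6 correct, $1,359 returned) come from this post.

```python
# Sketch of the scoring arithmetic. Assumes American money lines and a flat
# stake per game; both helpers are hypothetical, not part of the actual tool.

def moneyline_return(stake: float, line: int) -> float:
    """Total amount returned (stake + winnings) for a winning bet at an American money line."""
    if line > 0:
        # Underdog line: +250 pays $250 profit per $100 staked.
        return stake + stake * line / 100
    # Favorite line: -150 requires $150 staked to win $100.
    return stake + stake * 100 / abs(line)


def summarize(picks: int, hits: int, stake: float, total_returned: float) -> dict:
    """Hit rate, amount laid, profit, and ROI for a flat-stake set of picks."""
    laid = picks * stake
    profit = total_returned - laid
    return {
        "hit_rate": hits / picks,
        "laid": laid,
        "profit": profit,
        "roi": profit / laid,
    }


# Illustrative only: a winning $100 bet on a hypothetical +250 underdog.
print(moneyline_return(100, 250))  # 350.0

# Totals reported in the post: 13 picks, 6 correct, $1,359 returned.
result = summarize(picks=13, hits=6, stake=100, total_returned=1359)
print(f"hit rate {result['hit_rate']:.0%}, laid ${result['laid']:.0f}, "
      f"profit ${result['profit']:.0f}, ROI {result['roi']:.1%}")
# prints "hit rate 46%, laid $1300, profit $59, ROI 4.5%"
```

Because every pick gets the same stake, ROI is simply total profit divided by total laid, so a few long-shot winners can cover the losing picks.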

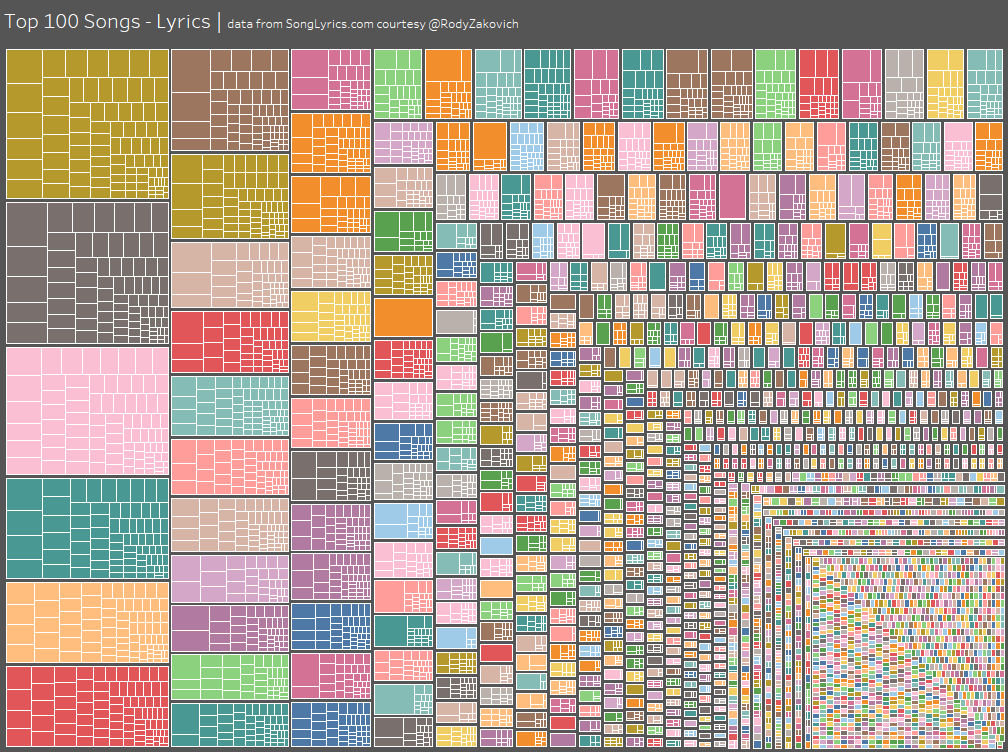

The details:

Overall, the lower seed won 10 times in the first two rounds. This year is on track for fewer lower-seed wins (22%) than the historic rate (26%), so even facing those “tough headwinds,” we still came close to our expectations.

“But CAN, several lower seeds won that you didn’t pick. Why didn’t the model see Middle Tennessee upsetting Minnesota?” The answer is simple: Middle Tennessee’s win came from variables we weren’t measuring. We picked the games that matched our criteria, and those criteria were built from variables found in most (not all) of the past games in which the lower seed won. Lower seeds outside our criteria can and will still win; our model was built to predict the highest number of upsets without over-picking. This is a perfect example of how no model, even a great one, will predict every outcome. In business, however, predicting most outcomes, or even some, can mean large revenue increases or significant cost savings.

Besides, we had some very close calls that would have put us well ahead. In several games where we gave the lower seed a good chance of winning, it simply lost (both Wichita State and Rhode Island were tied with under a minute to go). We also picked multiple games where the money lines showed Vegas giving the upset little chance, yet those teams came very close.

Our goal was not to choose games in a vacuum (the way most people bet), but to choose games that matched the criteria and spread the risk over several probable winners. This wasn’t about picking only upsets or every upset; it was about picking the set of games with the highest probability of the lower seed winning. By our measures of success, we achieved that goal.

We aren’t done quite yet either.

For the next round, we have 5 games that match our criteria:

Wisconsin over Florida

South Carolina over Baylor

Xavier over Arizona

Purdue over Kansas

Butler over North Carolina

**If any games in the round after that match our predictive criteria, we’ll post them Saturday before tip-off.**

The results of the first rounds:

The Machine Learning algorithm performed as advertised: it identified a set of characteristics in historic data that were predictive of future results. The implications for any business are clear: if you have historic data and leverage this type of expertise, you can make useful predictions about the future.

For more information about how we created the Machine Learning algorithm and how we are keeping score, you can read our Machine Learning methodology article here:

http://can2013.wpengine.com/machine-learning-basketball-methodology

If you would like to see how Machine Learning could improve your business, please feel free to reach out to either of us: Gordon Summers of Cabri Group (Gordon.Summers@CabriGroup.com) or Nate Watson of CAN (nate@canworksmart.com).